Apple says it plans to introduce generative AI features to iPhones later this year.

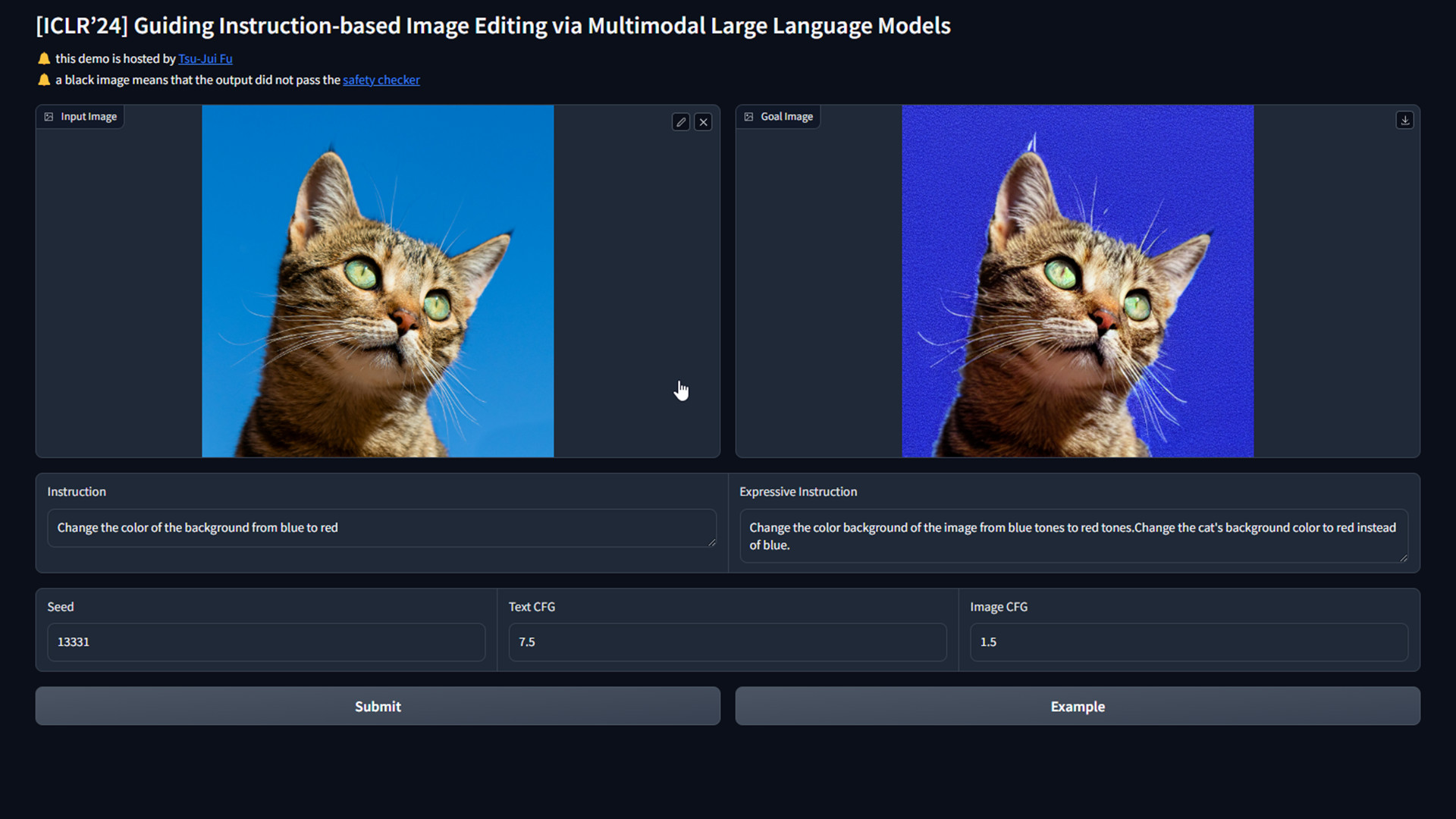

It’s called MGIE, or MLLM-Guided Image Editing, where MLLM stands for multimodal large language model.

(Image credit: Cédric VT/Unsplash/Apple)

The tech is the result of a collaboration between Apple and researchers from the University of California, Santa Barbara.

Public demonstration

The way MGIE works is pretty straightforward.

According to VentureBeat, users will need to give the tool explicit instructions.

You’ll have to guide it further to get the results you want.

MGIE is currently available on GitHub as an open-source project.

There’s also a web demo available to the public on the collaborative tech platform Hugging Face.

(Image credit: Cédric VT/Unsplash/Apple)

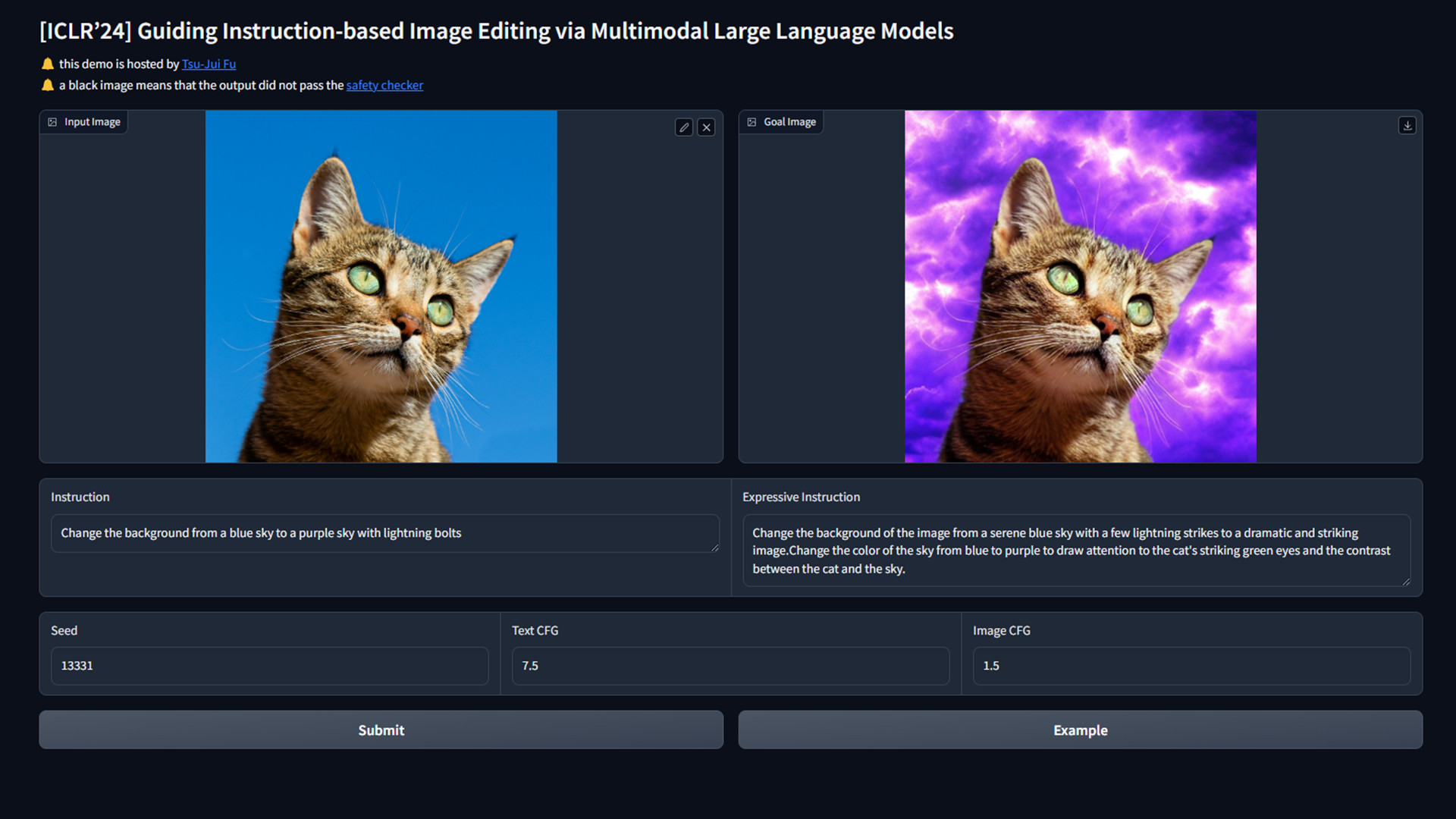

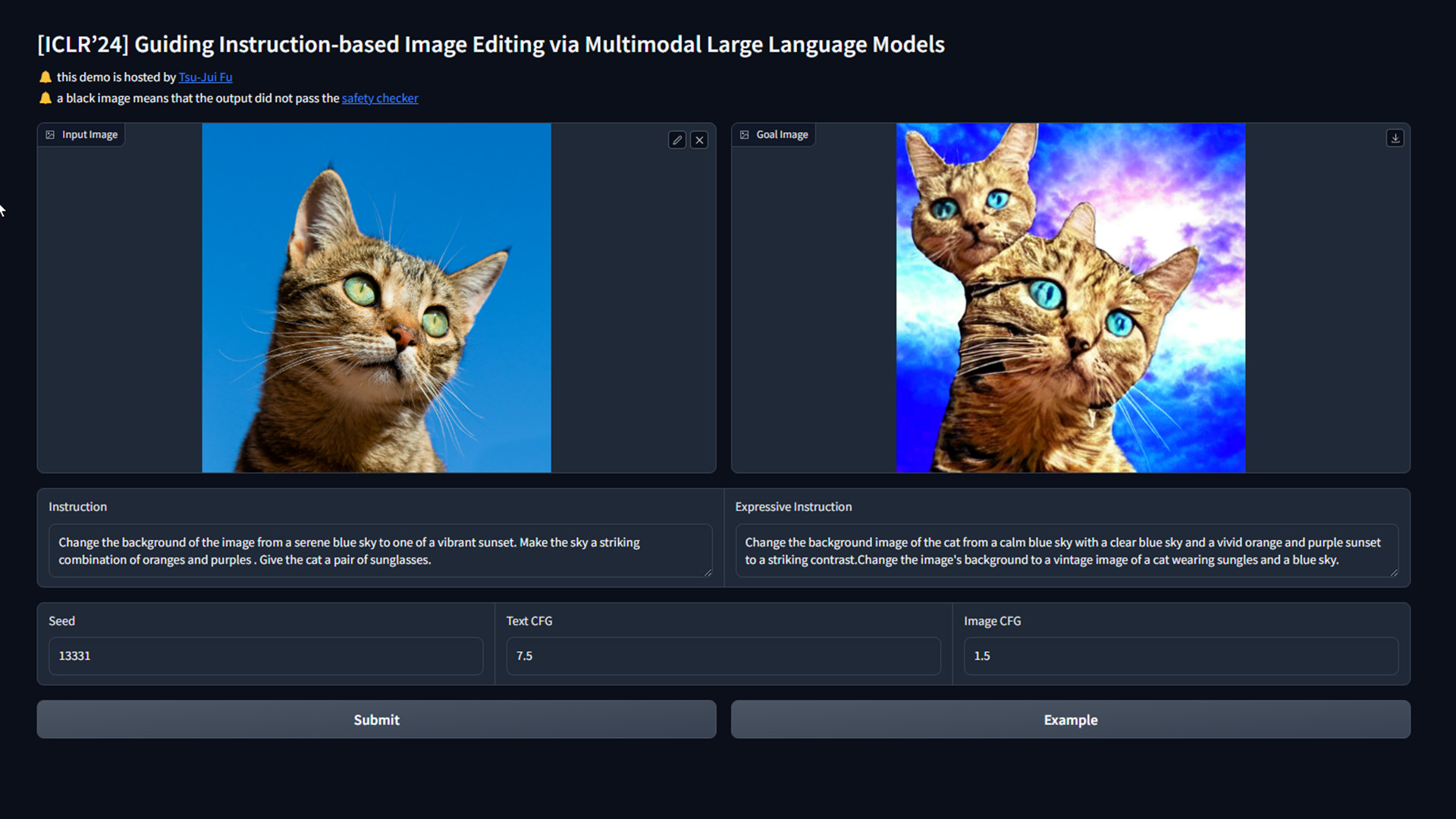

With access to this demo, we decided to take Apple’s AI out for a spin.

And in our experience, it did okay.

In one instance, we told it to change the background from blue to red.

However, MGIE instead made the background a darker shade of blue with a static-like texture.

There doesn’t appear to be any difference between the two.

MGIE is still a work in progress.

Outputs are not perfect.

One of the sample images shows a kitten turning into a monstrosity.

But we do expect all the bugs to be worked out down the line.

If you prefer a more hands-on approach, check out TechRadar’s guide on the best photo editors for 2024.